Wednesday, November 30, 2005

Call for participants

Holger Stenzel found this call for participants for the Pagoclone study. Here is the newspaper article:

Researchers at the University of Utah need stuttering adults to help evaluate the effects of pagoclone, a drug that has shown promise in clearing stumbling speech patterns.

At most, 1 percent of the population stutters, and many people who do might be hesitant to take medication, said Michael Blomgren, an assistant professor in the department of communication sciences and disorders at the U. That has made it challenging to recruit participants in parts of the nation where the study already has begun.

"For people that stutter, it's a pretty big problem. . . . It can really be disabling," said Blomgren, who has personal experience battling the disorder.

Drug companies already have tested pagoclone for treatment of anxiety and panic disorders, but researchers found it had beneficial effects on stutterers, with no side effects.

The U. is one of 10 centers nationwide recruiting for the clinical trials.

Potential participants who complete a screening will be asked to take the investigational medication or a placebo for eight weeks, and will receive about $20 per visit. At the end of the treatment period, participants may choose to extend the study, taking the drug for 12 months free of charge.

Those who want more information should call Blomgren at 801-585-6152, or visit http://www.stutteringstudy.com. (extract from Salt Lake Tribune)

Tuesday, November 29, 2005

Becoming a book reviewer

I have decided to do book reviews on stuttering. So if you have written a book on stuttering, be it on research or treatment, please send me a copy and I will review it. The first book that I will review is Comprehensive Stuttering Therapy by Phillip J. Roberts: see here. But let me first read the book... :-)

In the meantime, here is a summary from the website:

Therapy for teenaged and adult stutterers

Comprehensive Stuttering Therapy is a stuttering therapy program that you can follow from your home and will enable you to effectively and durably control your stuttering by addressing every single element of the stuttering phenomenon.

Comprehensive Stuttering Therapy will teach you how to relax the muscles of your larynx as well as other techniques directly addressing the speech mechanism. These techniques alone can probably help you control your stuttering in the short run but are not sufficient to ensure you a long-lasting self-sustainable fluency. For this reason, Comprehensive Stuttering Therapy also includes exercises targeting the other aspects of the stuttering phenomenon.

A comprehensive approach

Stuttering is a complex condition involving much more than the disfluencies that non-stutterers notice. The bulk of the stuttering phenomenon is hidden. Stuttering affects the whole person. Stuttering includes destructive feelings, perception and emotions such as shame, embarrassment, guilt, low self-esteem, frustration and fear of particular speaking situations. The stuttering phenomenon also encompasses unusual behaviors such as irregular breathing patterns, eye contact avoidance and word substitutions.

Treating such a complex condition requires a comprehensive and holistic approach that addresses each and every aspect of the phenomenon. If one aspect of the stuttering phenomenon is left unchecked, the stutterer is very likely to relapse after a few weeks or months.

Comprehensive Stuttering Therapy is not a cure

Stuttering is not a disease and therefore cannot be cured. Stuttering is a condition that, with the help of adequate stuttering therapy, can be successfully treated and controlled. Comprehensive Stuttering Therapy, as its name implies, is a therapy and will help you control and eliminate stuttering.

Understanding stuttering

Comprehensive Stuttering Therapy provides in plain English the basic knowledge needed to be able to grasp the complexity of stuttering. Stuttering can be compared to an iceberg: 90% of the mass of an iceberg is hidden below the surface. Similarly, the bulk of the stuttering phenomenon is hidden. Non-stutterers are usually only aware of the disfluencies (the tip of the iceberg) and have no idea about what is going on 'below the surface'.

Stutterers know that stuttering means a lot more than disfluencies. Stuttering is a complex issue composed of many different elements: negative feelings and emotions, strange behaviors and misconceptions that affect every aspect of a person's life. You will learn how these different elements interact with your speech, reinforce your disfluencies and sabotage your efforts to become fluent. Stuttering obviously cannot be effectively and durably eliminated if these hidden elements are ignored. Effective stuttering therapy should consider and address the entire stuttering phenomenon.

Thursday, November 24, 2005

Politically incorrect criteria for research

In a previous post, I listed ten criteria for judging the quality of research, taken from the article Science and pseudoscience in communication disorders: criteria and applications. In this post, I want to explain how I judge research articles without having read them! Of course, reading them gives you extra information on their quality and is recommended. But, shockingly, my judgment of the quality of the research rarely changes after reading the article. In my next posts, I will focus on actual PDS research again, because my last few posts were a bit too general.

Here are my "politically incorrect" criteria to estimate quality:

1. Age and status of author. The older and more senior the researcher (e.g. a professor), especially if he is the first author, the greater the chance that the research is irrelevant and wrong! There are several reasons. Older researchers and professors often become managers from their mid-40s onwards and leave the real research work to their students. Thus, they typically have not thought about the research in depth and are out of touch with the new literature and new techniques. They also live in a bubble, in the sense that no-one dares to contradict them: their students want to finish their PhD or get a letter of recommendation, and others fear their influence on powerful research committees. Moreover, professors have often worked on one theory for 20 years and find it emotionally very difficult to give it up, as that would mean having wasted 20 years of their life on a wrong idea! Of course, there are also professors who defy this pattern.

2. Not well-known universities. The less well known the university, the more likely it is that the research and researchers are not very good. I have been at several universities during my career as a student and researcher, and there are clear differences. Of course, there are good people and good research at less well-known universities, but I rarely see them.

3. Conflict of interest. If the author has a conflict of interest, the research often glosses over weaknesses. Examples of conflicts of interest are researchers studying a treatment approach they created themselves, a pharmaceutical company pushing a product, or a professor who strongly supports a theory.

4. Too precise figures. This is a big warning sign. For example, if I read things like "74.1% of all children recover naturally", I know that the researchers are not very good scientists. This is like saying: we project that the building will cost 14'637'748 Euro. The number is far too precise for the phenomenon it describes. This criterion might sound trivial, but it shows that the researchers have not properly digested the number. So when I write about children who stutter, I just say that 70 or 80% recover. Actually, good scientists often quote LESS PRECISE figures than mediocre scientists!

5. Keywords like "holistic", "revolutionary", and "cure", or big names in PDS. Good researchers never use vague terms. They also don't appeal to big names, as in "Sheehan said that" or "Einstein said that". I am only interested in the argument itself and not in who made it, at least not in a research article.

6. No quantitative background. I find that research (even in the soft sciences) by people without quantitative training is worse. They do not properly understand statistics and fall prey to logical fallacies much more easily. During their formative years, people with quantitative training were constantly faced with reality checks: if you miss a sign in an equation, the answer is wrong; if your thinking is sloppy, your computer code won't run. This made them aware of their own failings in thinking. Good scientists constantly feel stupid and make mistakes. So when they approach subjects that are not very quantitative, they are much more suspicious of their own reasoning and double-check it.

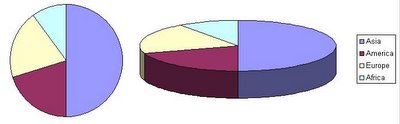

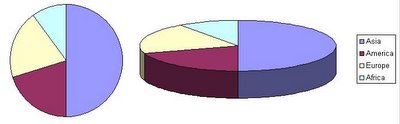

7. 3D graphs for 2D data. This is a big warning sign for me, but most people probably think I am a bit pedantic here. Non-scientists often represent data very inefficiently, for example each country's share of the total population. See above: a flat circle would be enough to represent the data, but often a 3D pie chart is used, even though the third dimension contains no information whatsoever. The key to finding new patterns is to look only at efficient visual representations. When you start a jigsaw puzzle, you also clear the table, keep only the pieces, and spread them out across it.

8. No effect size is calculated. Imagine you want to find out whether people with PDS weigh more than the general population. The standard procedure is to recruit two groups of about 20-30 people, matched by age, sex, and so on, and weigh them. You then have a distribution of weights for each group, and you can perform a t-test to compare the two. The t-test tells you whether the two samples could plausibly have come from populations with the same mean weight. It gives you a p-value: the probability of seeing a difference at least as large as the observed one through statistical fluctuation alone, i.e. if there were no real difference. Typically, if p is below 5% or 1% (1 in 20 or 1 in 100), researchers speak of a statistically significant difference. And almost always the story ends here. But it is important to know not only whether the two distributions differ, but also how big the difference is. For example, people with PDS might weigh only 100 grams more, which is a negligible effect. This is why the effect size should be computed: it is the true measure of whether there is a strong signal.

9. Small samples and anecdotes. Samples that are too small, and anecdotes, are always big warning signs, UNLESS it is a detailed case study.
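The weight example in criterion 8 can be made concrete with a small sketch. The numbers are hypothetical (mean weights of 70.1 kg and 70.0 kg, i.e. a 100-gram difference, and a common standard deviation of 10 kg) and are not from any study. The helper names `cohens_d` and `t_statistic` are illustrative: the first computes Cohen's d, one common effect-size measure, and the second Student's two-sample t statistic for two equal-sized groups that share a standard deviation. The same negligible effect is far from significant with 25 people per group, yet crosses the usual threshold with 100,000 per group.

```python
from math import sqrt


def cohens_d(mean_a: float, mean_b: float, sd_pooled: float) -> float:
    """Effect size: the difference in means expressed in units of the pooled SD."""
    return (mean_a - mean_b) / sd_pooled


def t_statistic(mean_a: float, mean_b: float, sd_pooled: float, n_per_group: int) -> float:
    """Student's two-sample t statistic for two groups of equal size
    n_per_group that share a common standard deviation sd_pooled."""
    standard_error = sd_pooled * sqrt(2 / n_per_group)
    return (mean_a - mean_b) / standard_error


# Hypothetical numbers: people with PDS weigh 100 g more on average,
# and body weight varies with a standard deviation of about 10 kg.
MEAN_PDS, MEAN_CONTROL, SD = 70.1, 70.0, 10.0

for n in (25, 100_000):
    d = cohens_d(MEAN_PDS, MEAN_CONTROL, SD)
    t = t_statistic(MEAN_PDS, MEAN_CONTROL, SD, n)
    # 1.96 is the two-sided 5% cutoff of the normal distribution; it only
    # approximates the t distribution well when the groups are large.
    print(f"n={n:>7}: d={d:.2f}, t={t:.2f}, |t| > 1.96: {abs(t) > 1.96}")
```

In both cases d is 0.01, a negligible effect, while t grows from about 0.04 to about 2.24 simply because the groups get bigger. A significance test alone cannot tell these two situations apart; the effect size can.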

Monday, November 21, 2005

BSA Research Committee

On Saturday, I attended the tri-annual meeting of the research committee of the British Stammering Association (BSA). In 2002, I was a trustee of the BSA and started the committee, as I felt that there should be closer cooperation among researchers themselves and between researchers and the national association, the BSA. It was quite a struggle to get it up and running and to get people interested in it, but now the opposite is true! :-) If you want to know more, please read here.

We voted in two new members, Dave Rowley and Sharon Millard. We also discussed the 2006 edition of the BSA Vacation Scholarship. And we will produce a 2006 Annual Review of PDS research.

Thursday, November 17, 2005

Pseudo or not

Today, I want to tell you about a paper by Finn, Bothe, and Bramlett. The title is Science and pseudoscience in communication disorders: criteria and applications. Their aim is to help clinicians distinguish between scientific and pseudoscientific treatment claims. Here are their 10 criteria:

1. Untestable: Is the Treatment Unable to Be Tested or Disproved?

2. Unchanged: Does the Treatment Approach Remain Unchanged Even in the Face of Contradictory Evidence?

3. Confirming Evidence: Is the Rationale for the Treatment Approach Based Only on Confirming Evidence, With Disconfirming Evidence Ignored or Minimized?

4. Anecdotal Evidence: Does the Evidence in Support of the Treatment Rely on Personal Experience and Anecdotal Accounts?

5. Inadequate Evidence: Are the Treatment Claims Incommensurate With the Level of Evidence Needed to Support Those Claims?

6. Avoiding Peer Review: Are Treatment Claims Unsupported by Evidence That Has Undergone Critical Scrutiny?

7. Disconnected: Is the Treatment Approach Disconnected From Well-Established Scientific Models or Paradigms?

8. New Terms: Is the Treatment Described by Terms That Appear to Be Scientific but Upon Further Examination Are Found Not to Be Scientific at All?

9. Grandiose Outcomes: Is the Treatment Approach Based on Grandiose Claims or Poorly Specified Outcomes?

10. Holistic: Is the Treatment Claimed to Make Sense Only Within a Vaguely Described Holistic Framework?

I think these ten criteria are useful tell-signs: the more of them a treatment approach meets, the more suspicious clinicians and patients should be of its claims. But I think it is also important to note that even if the research supporting a treatment approach can be considered scientific, this does not necessarily mean that the findings are correct. At the end of the article, they mention that they have assessed several approaches: the SpeechEasy device and Fast ForWord qualify as pseudoscientific, and the Lidcombe approach, for example, as scientific. This is a bit ironic, as I have pointed out here and here that the Lidcombe statistics are not quite right! But I guess the big difference is that I am able to formulate arguments questioning part of the Lidcombe outcome study; were the study pseudoscientific, I could not do so.

I need to read the article more carefully, and will report back here.

Wednesday, November 09, 2005

Stutter and make money!

For all those women who stutter, here is the perfect business opportunity to make money... I got the link from Einar's blog.

If you are nervous about the job interview and need some practice, I will be happy to provide Einar's phone number! :-)

Tuesday, November 08, 2005

How much is false?

I had nearly finished this post when my browser crashed!!! I hate software (and of course myself, for being so terribly human and not saving my work...)

In my last post, I spoke about an article by John Ioannidis in which he proposes tell-signs of an increased likelihood of false research results. Before launching into a discussion of whether PDS research shows most of these tell-signs, I want to make you aware of another, similar but PDS-specific, article by Finn, Bothe, and Bramlett. The title is Science and pseudoscience in communication disorders: criteria and applications. I met Patrick Finn once in Montreal and once in Tucson (Arizona). I was at a conference on human consciousness (much more interesting than PDS), and we had lunch in a Greek restaurant. I have never met Dr Bothe, but I know that she wrote an article some years ago in which she basically said that most (or was it all?) research on the treatment of PDS is false. So this article fits well into her agenda. :-) I will have a look at their article and give my feedback on the blog. But here is the abstract already:

PURPOSE: The purpose of this tutorial is to describe 10 criteria that may help clinicians distinguish between scientific and pseudoscientific treatment claims. The criteria are illustrated, first for considering whether to use a newly developed treatment and second for attempting to understand arguments about controversial treatments. METHOD: Pseudoscience refers to claims that appear to be based on the scientific method but are not. Ten criteria for distinguishing between scientific and pseudoscientific treatment claims are described. These criteria are illustrated by using them to assess a current treatment for stuttering, the SpeechEasy device. The authors read the available literature about the device and developed a consensus set of decisions about the 10 criteria. To minimize any bias, a second set of independent judges evaluated a sample of the same literature. The criteria are also illustrated by using them to assess controversies surrounding 2 treatment approaches: Fast ForWord and facilitated communication. CONCLUSIONS: Clinicians are increasingly being held responsible for the evidence base that supports their practice. The power of these 10 criteria lies in their ability to help clinicians focus their attention on the credibility of that base and to guide their decisions for recommending or using a treatment.

So, let me come back to Ioannidis' tell-signs. I will go through all of them and discuss whether they are relevant to PDS research. Research is less likely to be true:

a) when the studies conducted in a field are smaller; YES. Most research studies have quite small sample sizes.

b) when effect sizes are smaller; N/A. Actually, most research does not report effect sizes! I even have the impression that many researchers are not aware of the concept. Everyone seems to compute the statistical significance of their results (for example, a group difference between people with PDS and control subjects), but rarely whether the results, like group differences, are negligible or large. Crash course in stats: statistical significance tells you how unlikely a result this large would be if it were pure coincidence. Effect size tells you how large the effect is. Thus, you can have statistical significance, but the effect might be too small to be interesting.

c) when there is a greater number and lesser preselection of tested relationships; sometimes YES. This situation does happen in some studies, for example when all aspects of the subjects are recorded and then searched for correlations with the outcome. The more variables you test, the more likely you are to get a statistically significant result by chance.

d) where there is greater flexibility in designs, definitions, outcomes, and analytical modes; big YES. Due to the very nature of PDS, the only limit is your creativity. Definitions are vague, e.g. severity of stuttering or success in therapy.

e) when there is greater financial and other interest and prejudice; NO and YES. Generally, there is no great financial interest in PDS research, except of course for drug trials. Prejudice certainly exists, but not in the sense of ignoring blatantly obvious facts; rather, researchers fall victim to logical fallacies and groupthink in the absence of blatantly obvious facts.

f) when more teams are involved in a scientific field in chase of statistical significance; MAYBE.
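Item c) can be illustrated with a short calculation. Assuming k independent tests, each at significance level alpha, and no true effect behind any of them, the chance that at least one comes out "significant" is 1 - (1 - alpha)^k. This is a simplification (variables recorded in the same study are usually correlated, so independence only holds approximately), and the function names are illustrative. The Bonferroni correction shown is one standard, if conservative, remedy; it is not something the Ioannidis article prescribes.

```python
def prob_at_least_one_false_positive(k: int, alpha: float = 0.05) -> float:
    """Chance of at least one 'significant' result among k independent tests
    when every null hypothesis is actually true."""
    return 1 - (1 - alpha) ** k


def bonferroni_alpha(k: int, alpha: float = 0.05) -> float:
    """Per-test threshold that keeps the chance of any false positive at or below alpha."""
    return alpha / k


for k in (1, 5, 20, 50):
    p_any = prob_at_least_one_false_positive(k)
    p_any_corrected = prob_at_least_one_false_positive(k, bonferroni_alpha(k))
    print(f"{k:>2} variables: {p_any:.0%} chance of a spurious hit, "
          f"{p_any_corrected:.1%} with Bonferroni")
```

With 20 variables correlated against the outcome, there is roughly a 64% chance of at least one spurious "significant" finding, even if none of the variables matters at all.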

PDS research has many of these tell-signs. In my next post, I will tell you more about Tom's tell-signs of bad research articles.

Sunday, November 06, 2005

Theorists and reality

If you are a regular reader of my blog, you will surely have noticed that I am more of a theorist/scientist than a practitioner! In my defence, I can claim that I have been practising since the age of 3 and have become really good at stuttering! But of course I have very little experience as a therapist, in fact none. I have attended many group meetings, talked to therapists, and read the relevant literature. So I guess I have experienced most aspects of PDS in adults fairly well (though not from the therapist's side), but my biggest gap in experience is disfluent children: I have never really seen any, or talked to them.

So last Friday, I had some second-hand experience of what it is like to be the parent of a disfluent child. At the local swimming pool, I met an old friend of mine whose daughter stutters (or stuttered). He told me that she had stuttered for some months, and they took her to a speech and language therapist, who didn't really do anything except tell them that they should not judge her speech and that she should start first grade earlier (in Luxembourg, it is possible to delay first grade by a year if you are close to the age limit). Then, three days before she went to school, she suddenly stopped stuttering, and now stutters only occasionally and far less. I find this sudden recovery (if it proves to be permanent) amazing. Why does it happen so suddenly? I always had in the back of my mind that children can suddenly start and suddenly stop stuttering. But hearing it first-hand from my friend makes this phenomenon seem more important. How can this fit with my view that PDS starts with a defect and that recovery is compensation within the brain? How could this happen so suddenly? I asked a similar question here, with an answer by Prof Bosshardt (here). I can understand that stuttering can start suddenly, but that it stops suddenly is a bit too much for me. It would suggest to me that levels of neurotransmitters might be relevant. I need to think more about it. Also, is it really so sudden, or is it just parents fitting reality into more neatly defined periods?

Oh, I forgot: my friend also said that a few days before his daughter's recovery, he decided to repeat every single sentence she said, for one day, in the same way she had spoken it. He said that this made her aware of how she spoke, but he didn't know whether this had anything to do with her recovery.

Friday, November 04, 2005

Is most PDS research false?

You should have a look at John Ioannidis' article published in PLoS Biology, the free-access science journal: here. He claims that most research findings, especially those in fields similar to PDS, are wrong:

There is increasing concern that most current published research findings are false. The probability that a research claim is true may depend on study power and bias, the number of other studies on the same question, and, importantly, the ratio of true to no relationships among the relationships probed in each scientific field. In this framework, a research finding is less likely to be true when the studies conducted in a field are smaller; when effect sizes are smaller; when there is a greater number and lesser preselection of tested relationships; where there is greater flexibility in designs, definitions, outcomes, and analytical modes; when there is greater financial and other interest and prejudice; and when more teams are involved in a scientific field in chase of statistical significance. Simulations show that for most study designs and settings, it is more likely for a research claim to be false than true. Moreover, for many current scientific fields, claimed research findings may often be simply accurate measures of the prevailing bias. In this essay, I discuss the implications of these problems for the conduct and interpretation of research. (found link on Lubos Motl's blog)

A lot of PDS research satisfies nearly all of these criteria. In my next post I will go through the various arguments.
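Ioannidis backs these claims in his essay with a simple formula for the positive predictive value (PPV) of a claimed finding, i.e. the probability that it is actually true. It depends on the pre-study odds R (the ratio of true to no relationships being tested in a field), the significance level alpha, the type II error rate beta (power is 1 - beta) and a bias term u. Below is a minimal Python sketch of that formula as I read it from the essay; the function name and the example numbers are my own and purely illustrative, not estimates for PDS research.

```python
def ppv(R, alpha=0.05, beta=0.2, u=0.0):
    """Positive predictive value of a claimed research finding.

    R     -- pre-study odds: ratio of true to no relationships tested
    alpha -- type I error rate (significance threshold)
    beta  -- type II error rate (power = 1 - beta)
    u     -- bias: share of analyses that would not have been "findings"
             but get reported as findings anyway
    """
    # Claimed findings that reflect a true relationship
    true_pos = (1 - beta) * R + u * beta * R
    # Claimed findings where no relationship actually exists
    false_pos = alpha + u * (1 - alpha)
    return true_pos / (true_pos + false_pos)


# Hypothetical scenarios chosen for illustration only
scenarios = {
    "large study, power 0.8, 1:1 odds, no bias": dict(R=1.0, beta=0.2, u=0.0),
    "small study, power 0.2, 1:10 odds, some bias": dict(R=0.1, beta=0.8, u=0.2),
}
for label, params in scenarios.items():
    print(f"{label}: PPV = {ppv(**params):.2f}")
```

With these made-up numbers, the first scenario gives a PPV of about 0.94 and the second about 0.13: in a field of small, underpowered, somewhat biased studies, a claimed finding is far more likely to be false than true.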

Tuesday, November 01, 2005

Even Neo-Nazis and Anarchists stutter

Over the weekend I was in Goettingen (Germany). I met up with Dr. Martin Sommer for Sunday lunch. He is the main author of the Lancet article that was the first to report structural anomalies in the brains of people with PDS. See also this summary on PDS. The finding has since been more or less confirmed in principle by research from Foundas, Jaencke, and Watkins, although the location of the anomalies varies a bit. He is currently finishing his Habilitation, which is a bit like a super PhD thesis that qualifies you to become a professor at a German university. He has been quite busy with his clinical work and research in other areas, and even asked me to update him on the latest research. I suggested he should read my blog! :-) A PhD student of his is doing work on PDS, but he said that it is too early to talk about it. He also said that for the defence of his Habilitation thesis he will have to review all the latest research. We talked about many things, though not in great detail: 2-loop theory, the evolutionary disadvantage of people who stutter, psychological/Freudian theories (which we thought can only be helpful for secondary symptoms), and drugs (see here). We also spoke about speech therapies. Martin also has PDS, and we exchanged ideas and experiences, and we discussed the research I have done, and might soon be doing, on the data and therapy of the Kassel Stuttering Therapy.

My stay in Goettingen was interesting in another way too: here. That Saturday the NPD, an extreme right-wing political party (close to neo-Nazis), had an authorised march through the city, and left-wing anarchists (mainly from Berlin and Hamburg) decided to block the march by any means. Unknown to me, their romantic encounter happened in the street and neighbourhood where I stayed the night! I arrived in Goettingen at 4, and the whole place was blocked by literally thousands of policemen. After 2 hours of driving around to evade police road blocks, I left the car in a car park at the train station and made my way on foot. By then, passions had calmed, apart possibly from a punkish, elderly left-wing woman complaining to the German railways about their inefficient service! The atmosphere was a bit spooky and surreal as I crossed police lines, burned-out trash containers, and broken street signs. Half an hour later, town council workers had cleaned everything up, and Goettingen looked once again like a peaceful German version of Cambridge or Oxford.

Writing this blog also means trying to find PDS in anything that moves. So I guess even neo-Nazis and anarchists stutter. I wonder whether there are any famous bad guys, past or present, who stutter. You only ever see people who are famous for positive reasons. I know one "bad" guy who stutters: the sprinter Ben Johnson. Interestingly enough, he was never included in the Famous People who Stutter list. And he is/was pretty famous, beating Carl Lewis in the 100 metres in world-record time, only to be found guilty of using performance-enhancing drugs. I saw a TV documentary where he speaks/stutters and says that he has stuttered since the age of 4. If you know any bad guys who stuttered, let me know! After all, people with PDS are neither worse nor better than others!
